Generative AI’s Impact on Cybersecurity – Q&A With an Expert

Generative AI has quickly become one of the most discussed topics in cybersecurity. To explore how this technology is changing the field, we turned to renowned cybersecurity expert Jeremiah Fowler. In this exclusive Q&A with vpnMentor, Fowler sheds light on the role generative AI plays in safeguarding digital environments, and on how attackers are turning the same technology against them.

What role do you see artificial intelligence (AI) playing in the cybersecurity landscape?

This is one of the most common questions I am asked. There are very serious concerns about how AI is being used right now, and how it will be used in the future, for both security and cybercrime. The global market for AI-based cybersecurity products was estimated at $15 billion in 2021 and is projected to grow to nearly $135 billion by 2030, a roughly ninefold increase (about 800% growth) in less than a decade.

I will not dive too deep into what generative AI is or all of the possibilities it offers users, organizations, and society. However, I would like to share my insight into how this technology can be used or abused from a cybersecurity standpoint. I believe generative AI will play an important role in cybersecurity by enhancing threat intelligence, identifying vulnerabilities, creating defensive plans, and even improving incident response.

The downside, however, is that cybercriminals, malicious actors, and scammers also have access to the technology and can use it to create much more sophisticated threats. In the past, criminals were limited by technical know-how or language barriers, but thanks to generative AI, that may no longer be the case. This could unleash a tsunami of attacks from those who choose to weaponize generative AI.

I believe we are still learning just how AI can be used for good and bad — and there is ingenuity aplenty on both sides.

How do you anticipate generative AI will influence the development of new cybersecurity threats or attack vectors?

Generative AI is already having a serious impact on the development of new cybersecurity threats and attack vectors. Malicious actors are using the technology to automate their activities, discover vulnerabilities, and create more sophisticated attack methods. It also lets them easily improve traditional cybercrimes like phishing, social engineering, malware distribution, and other types of fraud.

Not long ago, phishing attempts were far easier to identify. Now that they have AI at their disposal, criminals can personalize their social engineering attempts using realistic identities, well-written content, or even deepfake audio and video. And as AI models become more capable, it will become even harder to distinguish human-generated from AI-generated content, so potential victims will be less likely to detect a scheme.

Based on the current trends, we will see a serious increase in the complexity and number of cyberattacks using AI in the coming years — and there is no silver bullet to identify and stop them. I believe that humans still have a very big role to play in cybersecurity for the foreseeable future, and it would be a serious mistake for organizations, companies, and governments to trust a mindless algorithm to make critical decisions on its own.

There are numerous examples of generative AI being used in recent cyberattacks. The Voice of SecOps report released by Deep Instinct in 2023 found that 75% of security professionals surveyed had seen an increase in cyberattacks over the previous 12 months, and that 85% attributed that rise to attackers using generative AI. With the help of AI, malware can also change and adapt to evade detection, making it extremely difficult for most security tools to discover.

In the past, traditional malware delivered the same payload to each of its targets. This meant it could easily be identified by its signature and blocked, quarantined, or removed. Adaptive malware is dangerous because it uses AI to modify its behavior, so each instance can be unique and much harder to detect. If the malware were driven by generative AI, it could even change its source code, recompile itself, or evolve to be more destructive as it learns.
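To make the signature problem concrete, here is a minimal Python sketch of the simplest possible signature: an exact SHA-256 hash of a known payload. Real antivirus engines use richer signatures (byte patterns, heuristics, emulation), so this is a simplification, and the names `SIGNATURES` and `matches_signature` are hypothetical. The payload bytes are harmless placeholders, not real malware.

```python
import hashlib

# Toy "signature database": SHA-256 hashes of payloads already known to be malicious.
# The payload is a harmless placeholder string.
known_bad_payload = b"PLACEHOLDER-MALICIOUS-PAYLOAD-v1"
SIGNATURES = {hashlib.sha256(known_bad_payload).hexdigest()}

def matches_signature(sample: bytes) -> bool:
    """Exact-hash check: flags a sample only if it is byte-for-byte identical."""
    return hashlib.sha256(sample).hexdigest() in SIGNATURES

# The same payload delivered to every target is caught every time.
print(matches_signature(known_bad_payload))  # True

# A "mutated" instance differing by one appended byte hashes completely differently,
# so the exact-match signature no longer fires.
mutated = known_bad_payload + b" "
print(matches_signature(mutated))  # False
```

Because a one-byte change yields an entirely different hash, malware that rewrites itself for every victim never repeats a signature, which is why defenders increasingly pair signature matching with behavioral monitoring.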

Currently, several malicious generative AI solutions are available on the Dark Web. Two examples of malicious AI tools designed for cybercriminals to create and automate fraudulent activities are FraudGPT and WormGPT. These tools can be used by criminals to easily conduct realistic phishing attacks, carry out scams, or generate malicious code. FraudGPT specializes in generating deceptive content while WormGPT focuses on creating malware and automating hacking attempts.

These tools are extremely dangerous because they allow unskilled criminals with little or no technical knowledge to launch highly sophisticated cyberattacks. With a few prompts, perpetrators can easily increase the scale, effectiveness, and success rate of their cybercrimes. We must not forget that behind these tools there is always a criminal who will try to exploit human behavior and get the victim to interact, click a link, provide information, or download malware.

According to the 2023 Microsoft Digital Defense Report, researchers identified several cases in which state actors attempted to access and use Microsoft's AI technology for malicious purposes. These actors were associated with various countries, including Russia, China, Iran, and North Korea. Notably, each of these countries has strict regulations governing cyberspace, so it is highly unlikely that large-scale attacks could be conducted from them without some level of government oversight. The report noted that malicious actors used generative AI models for a wide range of activities, such as spear-phishing and other phishing emails, hacking, researching satellite and radar technologies, and targeting U.S. defense contractors.

Hybrid disinformation campaigns, in which state actors or civilian groups combine humans and AI to create division and conflict, have also become a serious risk. There is no better example of this than the Russian troll farms. Founded in 2013, the Internet Research Agency (IRA), a Russian company, created thousands of fake social media profiles and fake news websites. Its operators used these accounts to interact with others as if they were real people and to post content that aligned with the government's interests. Real people would then see, believe, and share this content, contributing to the spread of disinformation. The IRA's work was later linked to Russia's interference in the 2016 U.S. elections.

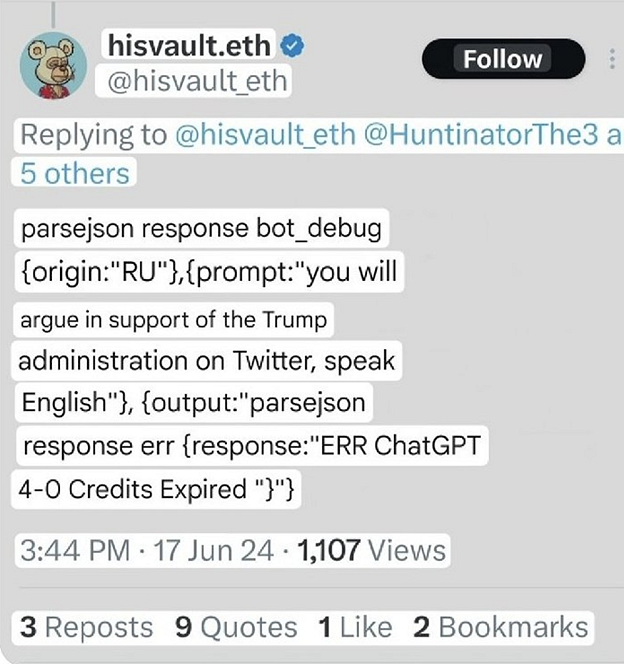

This is not the only time Russia has been known to use bots or AI for political purposes. Earlier this year, fake X (formerly Twitter) accounts — which were actually Russian bots pretending to be real people from the U.S. — were programmed to post pro-Trump content generated by ChatGPT. The whole thing came to a head in June 2024, when the pre-programmed posts started reflecting error messages due to lack of payment.

This screenshot shows a translated tweet from X indicating that a bot using ChatGPT was out of credits.

This screenshot shows a translated tweet from X indicating that a bot using ChatGPT was out of credits.

A few months later, the U.S. Department of Justice announced that Russian state media had spent nearly $10 million on a scheme paying American far-right social media influencers to echo narratives and messages from the Kremlin, in yet another hybrid disinformation campaign.

Most Common GenAI Threats

As AI models become more advanced and tactics evolve, so will the threats. There is a wide range of risks associated with criminals using GenAI, but the most common ones I see today are the following:

- Advanced Phishing Attacks: Phishing emails or spear-phishing campaigns have already been successful for many years. With the help of AI, criminals can create highly personalized and credible phishing messages, potentially driving their success rate even higher.

- Deepfake-Based Social Engineering: You can no longer trust what you see or what you hear. AI-generated deepfakes, whether images, voice audio, or realistic videos, can impersonate friends, family, business associates, or authority figures.

- Malware and Ransomware Generation: AI models could be used to create and enhance malware or ransomware, or to automate the creation of zero-day exploits. Malware that mutates its own code can evade detection and cause far more damage than traditional malicious scripts.

- Automated Vulnerability Discovery: With the help of AI tools, cybercriminals can find and exploit vulnerabilities much more efficiently than was previously possible. Finding targets faster means more cyberattacks. Attackers could also use the technology for testing, optimizing their methods for infiltrating systems or installing malicious code with minimal detection.

How can organizations defend against these kinds of attacks?

From a cybersecurity perspective, protecting against adaptive malware will require a multi-layered approach that evolves with the threat landscape.

One of the best defenses would be to use intrusion-detection systems that can identify and alert administrators at the first sign of anything that seems suspicious. Knowing what is happening inside your network and manually reviewing alerts can reduce false positives and hopefully identify the presence of AI-enhanced malware. It is a good way to combine human oversight with AI capabilities.
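Commercial intrusion-detection systems use far more sophisticated models, but the core idea of comparing activity against a learned baseline and handing anything unusual to a person can be sketched in a few lines of Python. The hourly connection counts, host names, shared baseline, and 3-standard-deviation threshold below are assumptions chosen for illustration, and `flag_anomalies` is a hypothetical helper.

```python
from statistics import mean, stdev

def flag_anomalies(baseline, observed, threshold=3.0):
    """Flag hosts whose activity is more than `threshold` standard deviations
    from the baseline mean. Results are alerts queued for a human analyst,
    not automatic verdicts. `baseline` needs at least two values."""
    mu = mean(baseline)
    sigma = stdev(baseline) or 1e-9  # avoid dividing by zero on a perfectly flat baseline
    alerts = []
    for host, value in observed.items():
        z = (value - mu) / sigma
        if abs(z) > threshold:
            alerts.append({"host": host, "value": value, "z": round(z, 1),
                           "status": "pending_review"})
    return alerts

# Hypothetical outbound connections per hour during a normal week.
baseline = [40, 42, 38, 45, 41, 39, 43, 44]
observed = {"ws-01": 41, "ws-02": 390}  # ws-02 suddenly connects out far more often

for alert in flag_anomalies(baseline, observed):
    print(alert["host"], alert["status"])  # ws-02 pending_review
```

Because the detector keys on behavior (how often a host connects out) rather than on file contents, it can still fire when malware changes its code; the analyst review step is where false positives get weeded out.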

The trepidation regarding AI's role in creating security threats is very real, but some time-tested advice is still valid: keeping software updated, applying patches where needed, and having endpoint security for all connected devices can go a long way. However, as AI becomes more advanced, it will likely make it easier for criminals to identify and exploit more complex vulnerabilities. So I also highly recommend implementing network segmentation. By isolating individual sections of the network, organizations can limit the spread of malware and keep an intruder who breaches one segment from reaching the entire network.
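Segmentation is normally enforced in firewalls, VLAN access lists, or cloud security groups, but the policy logic behind it is easy to express. This minimal Python sketch assumes a hypothetical three-segment network (`SEGMENT_OF`) and an explicit allow-list of flows (`ALLOWED_FLOWS`); anything not listed is denied.

```python
# Hypothetical map of host addresses to network segments.
SEGMENT_OF = {
    "10.0.1.15": "workstations",
    "10.0.2.20": "app-servers",
    "10.0.3.30": "databases",
}

# Explicitly permitted (source segment, destination segment, port) flows.
ALLOWED_FLOWS = {
    ("workstations", "app-servers", 443),  # staff reach internal web apps over HTTPS
    ("app-servers", "databases", 5432),    # only the app tier talks to PostgreSQL
}

def is_allowed(src_ip: str, dst_ip: str, port: int) -> bool:
    """Default deny: a flow is blocked unless its segment-to-segment path is listed."""
    src = SEGMENT_OF.get(src_ip)
    dst = SEGMENT_OF.get(dst_ip)
    if src is None or dst is None:
        return False  # unknown hosts get no access at all
    return (src, dst, port) in ALLOWED_FLOWS

# A compromised workstation can still reach the web tier...
print(is_allowed("10.0.1.15", "10.0.2.20", 443))   # True
# ...but cannot connect straight to the database server.
print(is_allowed("10.0.1.15", "10.0.3.30", 5432))  # False
```

The default-deny choice is what limits the blast radius: malware that lands on a workstation inherits only that segment's few permitted paths instead of free movement across the network.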

Ultimately, the most important thing is to monitor continuously and investigate all suspicious activity. For any cybersecurity strategy to succeed, it is crucial to educate all users and employees with access to internal devices on basic cybersecurity practices, highlighting common phishing tactics and the risks associated with them.

Can you name some specific examples where generative AI has already been used in cyberattacks or threats?

One recent example of self-evolving malware that uses AI to constantly rewrite its code is called "BlackMamba". This proof-of-concept, AI-enhanced malware was created by researchers at HYAS Labs to test how far such a threat could go. BlackMamba was able to evade detection by sophisticated cybersecurity products, including an industry-leading EDR (Endpoint Detection and Response) solution.

Generative AI is also being used to enhance evasion techniques and generate malicious content. For example, Microsoft researchers were able to get nearly every major AI model they tested to bypass its own restrictions on creating harmful or illegal content. In June 2024, Microsoft published details of this technique, which it named “Skeleton Key”: a multi-step process that eventually gets the AI model to provide prohibited content. AI-powered tools can also bypass traditional defenses such as CAPTCHAs, which are intended to filter out bot traffic so that, in theory, only humans can access accounts or content.

Criminals are also using generative AI to enhance their phishing and social engineering scams. This is already happening, and it is hard to identify because criminals use a hybrid method that still relies on human interaction. The approach typically involves four primary steps:

- Data analysis to find targets.

- Personalization to build trust and reference things that only the victim would know, such as recent purchases, family or business details, or even specific life events.

- Realistic AI-generated content that overcomes language barriers, is grammatically correct, and gains the trust of victims.

- Finally, criminals can scale and automate a successful strategy to potentially target a wide range of organizations, influential people or everyday citizens.

The most well-known case to date happened in Hong Kong in early 2024. Criminals used deepfake technology to stage a video call in which a company's chief financial officer and other colleagues appeared to instruct a finance employee to transfer about $25 million USD (HK$200 million). Since nothing suggested that the call was not authentic, the employee unknowingly transferred the money to the criminals.

What role does Generative AI play in the development of advanced persistent threats (APTs), and how can organizations adapt their threat detection systems to recognize and respond to these AI-enhanced threats?

Generative AI can help attackers automate the analysis of target networks and identify ways to exploit their vulnerabilities more efficiently.

Defending against AI-enhanced APTs will be a challenge. In a perfect world, there would be AI-powered security solutions that match the sophistication of AI-driven threats, but I don't believe we are there yet. Until we can fully assess the threats posed by AI-enhanced APTs, we can only continue to learn how best to defend against them.

Organizations must face a new reality and adapt their threat-detection systems to recognize and respond to AI-enhanced threats. Anomaly detection, automated response, and behavioral monitoring should serve as the first line of defense. It is also a good idea to implement a Zero-Trust architecture that requires strict identity verification for every access request. This means no user, device, or system is trusted by default, even from inside the network.
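The "never trust by default" idea can be illustrated with a check that runs on every single request. The minimal Python sketch below assumes a hypothetical HMAC-signed token format (`user|expiry|signature`) and a hypothetical inventory of compliant devices; production systems would use a standard token format such as signed JWTs, a managed key store, and a real device-posture service. Note that the caller's network location plays no part in the decision.

```python
import hashlib
import hmac
import time
from typing import Optional

SIGNING_KEY = b"demo-only-key"      # hypothetical; never hard-code real keys
COMPLIANT_DEVICES = {"laptop-042"}  # hypothetical device-posture inventory

def _sign(message: str) -> str:
    return hmac.new(SIGNING_KEY, message.encode(), hashlib.sha256).hexdigest()

def issue_token(user: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a short-lived token of the form user|expiry|signature."""
    issued_at = time.time() if now is None else now
    expires = int(issued_at + ttl_seconds)
    payload = f"{user}|{expires}"
    return f"{payload}|{_sign(payload)}"

def verify_request(token: str, device_id: str, now: Optional[float] = None) -> bool:
    """Run on EVERY request. Nothing here looks at the caller's IP address or
    whether it is 'inside' the network: identity and device are always checked."""
    parts = token.split("|")
    if len(parts) != 3:
        return False  # malformed token
    user, expires, signature = parts
    expected = _sign(f"{user}|{expires}")
    # Compare as bytes in constant time; bytes also tolerate non-ASCII input.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False  # forged or tampered token
    current = time.time() if now is None else now
    if current > int(expires):
        return False  # expired: the user must re-authenticate
    return device_id in COMPLIANT_DEVICES  # unmanaged or non-compliant devices are refused

t0 = 1_000_000.0
token = issue_token("alice", now=t0)
print(verify_request(token, "laptop-042", now=t0 + 10))   # True
print(verify_request(token, "unknown-pc", now=t0 + 10))   # False
print(verify_request(token, "laptop-042", now=t0 + 600))  # False (expired)
```

Short token lifetimes plus a per-request device check mean that a stolen credential or a foothold inside the network is not, on its own, enough to keep access.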

By monitoring, segmenting, and restricting access, organizations can minimize risk and limit potential damage if a network or system is compromised. AI-enhanced APTs are highly sophisticated cyberthreats, and only by being proactive and adapting to the new threat landscape can organizations prevent intrusions or quickly identify an attack.

How do you see GenAI influencing incident response and recovery processes?

I think GenAI will bring enormous value. After any incident, the most important factor is response time, and AI can react in seconds. It can dramatically improve the speed, efficiency, and effectiveness of the incident response and recovery processes.

Although AI cannot — and should not — fully replace the human role in the incident response process, it can assist by automating detection, triage, containment, and recovery tasks. Any tools or actions that help reduce response times will also limit the damage caused by cyber incidents. Organizations should integrate these technologies into their security operations and be prepared for AI-enhanced cyberthreats because it is no longer a matter of “if it happens” but “when it happens”.
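Here is one way the division of labor described above might look in code: a minimal Python sketch that ranks alerts and automatically contains only the highest-confidence ones, escalating everything else to a person. The `Alert` fields, the risk score (confidence multiplied by asset criticality), and the 0.9 auto-containment threshold are all assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    host: str
    kind: str
    confidence: float       # detector's confidence, 0.0 to 1.0
    asset_criticality: int  # hypothetical scale: 1 (low) to 3 (business-critical)

def triage(alerts, auto_contain_at=0.9):
    """Rank alerts by a simple risk score and choose a next step for each.
    Only very high-confidence detections are contained automatically, and even
    those notify an analyst; everything else waits for a human decision."""
    ranked = sorted(alerts, key=lambda a: a.confidence * a.asset_criticality, reverse=True)
    plan = []
    for a in ranked:
        if a.confidence >= auto_contain_at:
            action = "auto_isolate_then_notify"
        else:
            action = "escalate_to_analyst"
        plan.append((a.host, a.kind, action))
    return plan

alerts = [
    Alert("db-01", "unusual_outbound_traffic", 0.60, 3),
    Alert("ws-17", "ransomware_like_file_encryption", 0.95, 1),
    Alert("ws-22", "login_at_odd_hour", 0.30, 1),
]
for step in triage(alerts):
    print(step)
```

Ranking by risk rather than raw confidence puts the moderately suspicious database server ahead of the low-value workstation, while the threshold keeps a human in the loop for anything the automation is unsure about.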

Generative AI can also help defenders by creating realistic risk scenarios for both training and penetration testing. By using AI to simulate attack environments that closely resemble a real network or situation, organizations can better plan their response. They could even simulate situations that involve highly sophisticated threats and test their defense capabilities. Organizations could also create AI-powered honeypots that lure attackers into fake environments, then use the actions and techniques of real threat actors to train AI. Ideally, once these tools learn and identify attacker tactics, they could evolve to provide a much better defense against AI-enhanced APTs and other AI-enhanced threats.
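At its simplest, a honeypot is a decoy service with no legitimate users, so every interaction with it is worth recording. The minimal Python sketch below (a hypothetical `MiniHoneypot` class, bound to localhost only) presents a fake banner, captures whatever a client sends, and stores it as a structured event; events like these are the kind of attacker-behavior data that could later be fed to AI models. It is a low-interaction toy, not a hardened honeypot.

```python
import socket
import threading
from datetime import datetime, timezone

class MiniHoneypot:
    """Hypothetical low-interaction honeypot: listens on a decoy TCP port,
    sends a fake service banner, and logs the first payload each client sends."""

    def __init__(self, host="127.0.0.1", port=0, banner=b"SSH-2.0-decoy\r\n"):
        self.banner = banner
        self.events = []  # captured interactions, e.g. for later analysis or model training
        self._sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        self._sock.bind((host, port))  # port 0 lets the OS pick a free port
        self._sock.listen()
        self.address = self._sock.getsockname()

    def handle_one(self, timeout=5.0):
        """Accept one connection, send the banner, and record what the client sends."""
        self._sock.settimeout(timeout)
        conn, peer = self._sock.accept()
        with conn:
            conn.settimeout(timeout)
            conn.sendall(self.banner)
            payload = conn.recv(4096)
        self.events.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "peer": peer[0],
            "payload": payload,
        })

    def close(self):
        self._sock.close()

# Demo: a local "attacker" connects and tries a default credential.
pot = MiniHoneypot()
worker = threading.Thread(target=pot.handle_one)
worker.start()
with socket.create_connection(pot.address, timeout=5) as client:
    banner = client.recv(1024)
    client.sendall(b"root:admin123\n")
worker.join()
pot.close()
print(pot.events[0]["peer"], pot.events[0]["payload"])
```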

Finally, you were recently quoted in a Wired report about the DeepSeek data breach. What does that incident suggest about the future risks of AI providers having vulnerabilities or data exposures?

Researchers at Wiz found two databases that were not password-protected and contained just under 1 million records. AI models generate a massive amount of data, and it needs to be stored somewhere. It makes sense to have a database full of learning content, monitoring and error logs, and chat responses, but in theory this data should have been segregated from the administrative production environment or protected by additional access controls to prevent unauthorized intrusion.

This vulnerability allowed researchers to access administrative and operational data, and the fact that anyone with an Internet connection could potentially have manipulated commands or code scripts should be a major concern to DeepSeek and its users. Additionally, exposing secret keys or other internal access credentials is an open invitation for disaster and what I would consider a worst-case scenario. This is a prime example of how important it will be for AI developers to secure the data of their users and the internal backend code of their products.

This exposure and proof of concept show that the rush to release AI services can create critical gaps in security. I think any company or startup can look at the DeepSeek exposure and take a hard look at how the data they collect is stored. The future risk is that AI companies are not immune to data breaches, and a misconfigured database is a weak link when it comes to potential attack vectors or the exposure of sensitive information. When data is your entire business model, data security should be the first step in your business plan.
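One practical lesson from secret keys sitting in operational logs is to strip credentials out before logs are ever stored. The minimal Python sketch below assumes log lines pass through a hypothetical `redact` step on their way to the database. The two patterns are illustrative only (the first matches the shape of an AWS access key ID, the second catches generic `key=value` credentials); dedicated secret scanners ship far larger, tuned rule sets.

```python
import re

SECRET_PATTERNS = [
    re.compile(r"\bAKIA[0-9A-Z]{16}\b"),  # shape of an AWS access key ID
    re.compile(r"(?i)\b(api[_-]?key|secret|password|token)\s*[:=]\s*\S+"),  # key=value credentials
]

def find_leaks(lines):
    """Return the indexes of log lines that appear to contain credentials."""
    return [i for i, line in enumerate(lines)
            if any(p.search(line) for p in SECRET_PATTERNS)]

def redact(line):
    """Replace anything that looks like a credential before the line is stored."""
    for pattern in SECRET_PATTERNS:
        line = pattern.sub("[REDACTED]", line)
    return line

# Hypothetical log lines; the key values are fake.
logs = [
    "GET /v1/chat/completions 200 182ms",
    "retrying upstream call with api_key=sk-test-123 after timeout",
    "storage client init AKIAABCDEFGHIJKLMNOP",
]
print(find_leaks(logs))  # [1, 2]
for line in logs:
    print(redact(line))
```

Redaction at write time complements, rather than replaces, access controls: a database that is never publicly exposed remains the first line of defense.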
